There are wonderful collections of prioritization techniques out there that you can read to learn about the most popular techniques and how they work. My favorite two collections are one from Folding Burritos and one from Productboard.

This collection is meant to give you some tips that are based on my real-world application of these techniques, some background info that you might not know, and show you some more techniques that you might not know about yet (at least in the context of prioritization).

Let’s start with two techniques that you have for sure heard a lot about already, and then raise the bar little by little.

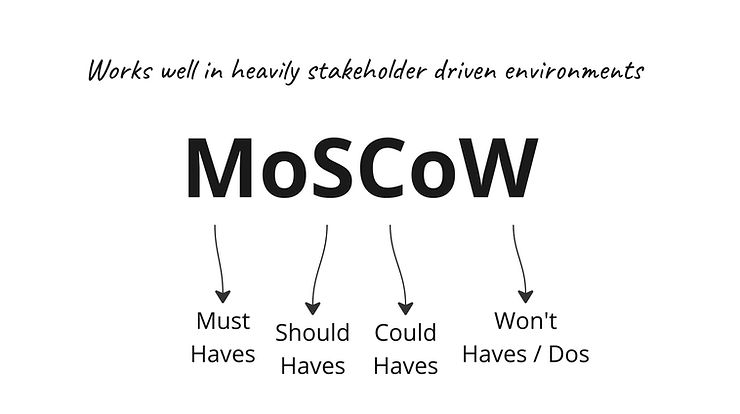

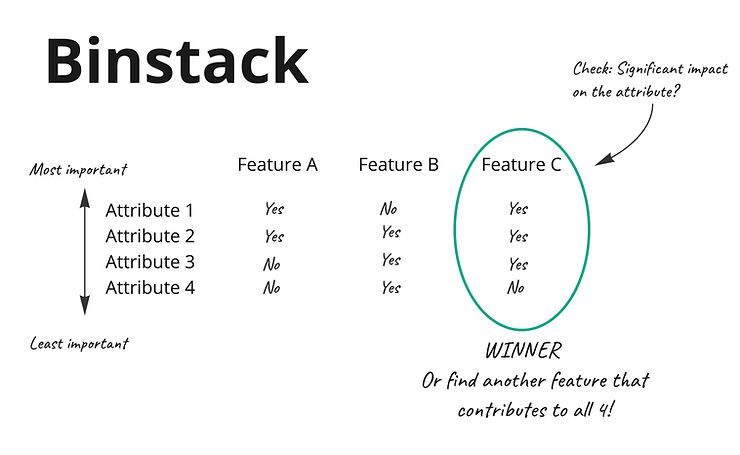

1. MoSCoW

Let’s start with the simplest, most criticized prioritization method.

The ONE use case that MoSCoW can really help you to prioritize well!

MoSCoW is NOT a good prioritization method, let’s make that clear from the beginning. It’s very subjective, and more often than not it creates a little fight arena rather than a good conversation.

Suddenly everything is a must have! Based on what please?!

𝗛𝗲𝗿𝗲 𝗶𝘀 𝗼𝗻𝗲 𝘀𝗰𝗲𝗻𝗮𝗿𝗶𝗼 𝘄𝗵𝗲𝗻 𝗠𝗼𝗦𝗖𝗼𝗪 𝘄𝗼𝗿𝗸𝗲𝗱 𝘄𝗲𝗹𝗹 𝗳𝗼𝗿 𝗺𝗲 𝗶𝗻 𝘁𝗵𝗲 𝗽𝗮𝘀𝘁:

A heavily stakeholder-driven organization where you have to give your stakeholders the feeling that they’re making all the decisions.

𝗥𝗲𝗾𝘂𝗶𝗿𝗲𝗱 𝘀𝗸𝗶𝗹𝗹𝘀:

Moderation and patience.

𝗣𝗿𝗼𝗰𝗲𝘀𝘀:

- Define what success looks like together with stakeholders. This is very important because you need something to base the MoSCoW buckets on.

- Let stakeholders discuss items and move them into MoSCoW buckets.

- Take the Must Haves bucket and ask “If we had to ship by end of the week [𝘰𝘳 𝘵𝘰𝘮𝘰𝘳𝘳𝘰𝘸, 𝘰𝘳 𝘸𝘩𝘢𝘵𝘦𝘷𝘦𝘳 𝘧𝘦𝘦𝘭𝘴 𝘪𝘮𝘱𝘰𝘴𝘴𝘪𝘣𝘭𝘦], which of these should we ship?”

OR

Play “Buy a Feature” with the items in the Must Have bucket.

- Whenever appropriate, ask for input and data that back up the arguments for the other stakeholders. Explain to them that you are the moderator and that you want to help them have a well-informed discussion.

- Ask in which order your team should tackle the items and make a silent stack ranking. Discuss only items that are still moving after 2 rounds.

- Run the resulting list of Must Haves past your engineering team to check whether it’s anywhere close to realistic or still needs to be cut. If it’s still too big, you’ll have to do a couple more rounds, either quite early on or during development (depends on your company).

- Make clear that this is only the start and that things are fluid and can change while you’re constantly learning and adapting. Align stakeholders on the point that what matters most is achieving the goal defined in step 1, and that these items are our best guess today of what we need.

- Follow up with documentation that also includes the discussion points and why the group decided for or against each item.

This will probably happen across several meetings, and you’ll have to repeat the process again and again because the conversation will still be opinionated but you can guide it to your advantage.

This is NOT a good decision making process! It has nothing to do with empowered teams who use discovery and experimentation techniques to find out what’s really important. It’s not even fully agile. But there are a lot of companies who still work in this type of reality AND there are a lot of people who think in binary terms about MoSCoW. Therefore, I wanted to point out that MoSCoW has its place.

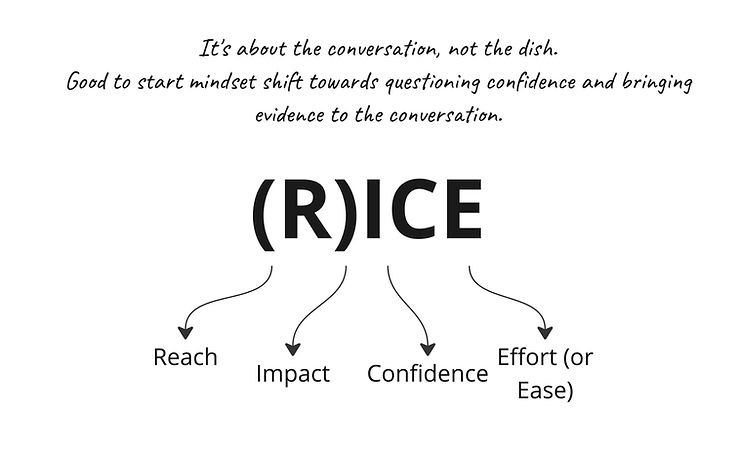

2. (R)ICE

The second most controversially discussed prioritization framework: (R)ICE

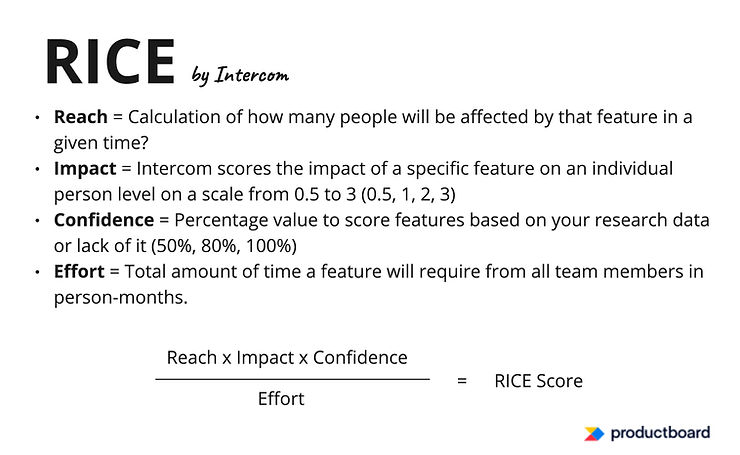

RICE: built by Intercom

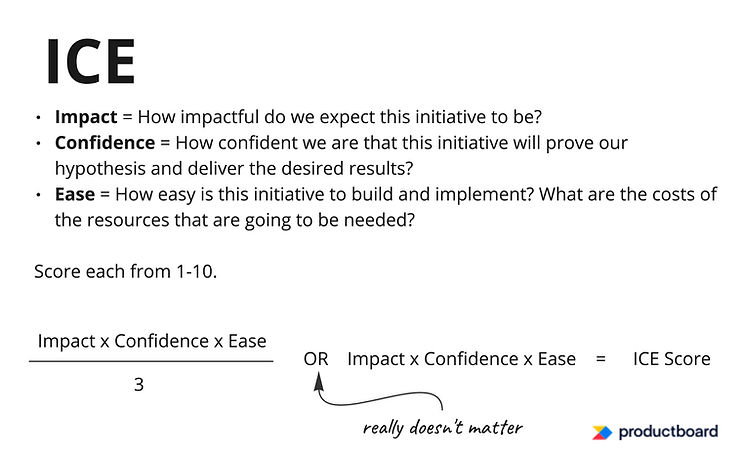

ICE: even more straightforward

Source of description in images: Productboard

Like any other scoring method, (R)ICE is criticized for being pseudo-quantitative because the scoring is heavily subjective and can be gamed. And this is right, unless you add calculations that are based on experiments.

It’s still a very good method to start with and to focus the conversation on confidence, with the aim of getting more data and evidence behind prioritization.

What sounds easy is in fact not. As soon as you start discussing the scores of the features, your managers will say “this is a random score”. You can argue with data but it’s still random. Therefore, whatever scoring method you use, you should do the scoring with your team to a) get different perspectives into the scoring, and more importantly b) start a conversation that goes beyond pure guesses and strong opinions.

𝗨𝘀𝗲 𝗖𝗮𝘀𝗲𝘀:

- Your prioritization is opinionated and you want to slowly turn the conversation around to focus on evidence.

- Your prioritization is already quite evidence based, your team/company has a shared understanding of what “Impact” really means, and you only need a tool that helps with quick decision making.

𝗠𝗮𝗶𝗻 𝗚𝗼𝗮𝗹:

Decide which features need research, analysis or experiments to get more evidence behind the assumptions on impact. Start conversations that go beyond opinions and guesses.

𝗠𝗮𝗶𝗻 𝗦𝗸𝗶𝗹𝗹𝘀:

Negotiation to get beyond “this is random”, communication to cruise along the evidence conversation, patience to turn around the conversation.

𝗣𝗿𝗼𝗰𝗲𝘀𝘀:

- Align on what “Impact” means. Very important! I usually break it down to the business goals and customer outcomes of the current time horizon (quarter, year, etc.). It could also be your OKRs.

- 👉 Whatever it is, it SHOULD come from the product strategy. If there’s no clear product strategy, here is a workaround.

- Define how you rate confidence. High confidence should only be possible when you have real market feedback! I recommend Itamar Gilad’s confidence meter. You can adjust it to your needs.

- Score each factor with your team. You can also leave the “E” empty because at this step we’re not trying to find quick wins vs. big projects.

- Have a conversation with others as well and adjust. It’s about the conversation!!!

- You can mark the ones with a low C and high I as “needs evidence” and plan in whatever needs to be done for that (analysis, research, experiments). For the others you can get estimates for the “E”.

Looks very easy but really it’s random. And therefore it’s more about the conversation!!!

And no, it’s not a scientific or purely quantitative method.
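To make the arithmetic concrete, here is a minimal sketch of the scoring step. It assumes the commonly cited RICE formula (Reach × Impact × Confidence ÷ Effort) and leaves the score empty while the “E” is still unknown. The idea names, the numbers, and the thresholds for “needs evidence” are made up for illustration; agree on your own with your team.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Idea:
    name: str
    reach: float        # e.g. users affected per quarter
    impact: float       # team-agreed scale, here 0.25 (minimal) to 3 (massive)
    confidence: float   # 0.0 to 1.0, taken from your confidence scale
    effort: Optional[float] = None  # e.g. person-months; may stay empty at first


def rice_score(idea: Idea) -> Optional[float]:
    """Reach * Impact * Confidence / Effort; None while effort is unknown."""
    if idea.effort is None or idea.effort <= 0:
        return None
    return idea.reach * idea.impact * idea.confidence / idea.effort


def needs_evidence(idea: Idea, min_impact: float = 2.0,
                   max_confidence: float = 0.5) -> bool:
    """High I but low C: plan research or experiments before estimating E.

    The thresholds are assumptions for this example, not part of RICE.
    """
    return idea.impact >= min_impact and idea.confidence <= max_confidence


# Hypothetical ideas, only for illustration.
ideas = [
    Idea("Calendar sync", reach=2000, impact=2.0, confidence=0.8, effort=4),
    Idea("AI scheduling", reach=5000, impact=3.0, confidence=0.3),
    Idea("Dark mode", reach=1000, impact=0.5, confidence=0.9, effort=1),
]

for idea in ideas:
    if needs_evidence(idea):
        print(f"{idea.name}: needs evidence first")
    else:
        print(f"{idea.name}: RICE = {rice_score(idea)}")
```

The number that comes out is only as good as the conversation that produced the inputs, so treat it as a talking point rather than a verdict.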

And if it helps here’s a video of how I used it at Doodle.

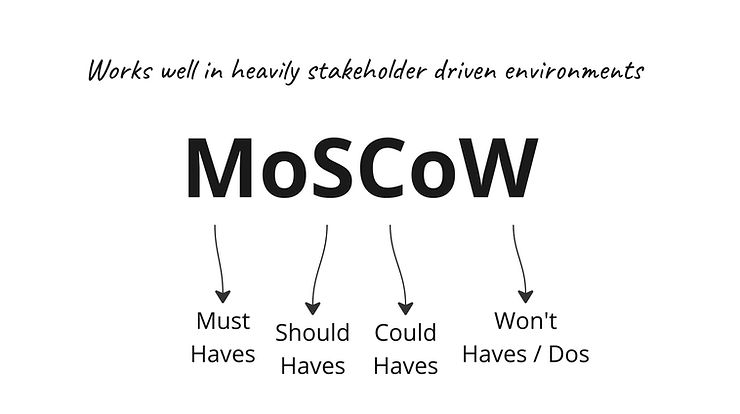

3. Binstack

Game changer!

This is one of those things that you do without knowing it is a thing. I’ll be honest with you: I only learned it has a name a couple of weeks ago. Thanks Oleksander for the link.

Here’s the link to a great description: https://longform.asmartbear.com/maximized-decision/

Basically you stack rank the most important measurable factors (in the article called “attributes”) that you want to achieve, and then compare your features against those factors. Instead of saying “Feature A supports 4 out of 5 factors, feature B only 3, so we’ll go with feature A” you say “Feature A supports factors 1 and 2 but not 3 but feature B supports factors 1, 2 and 3, so we’ll go with feature B.”

𝗨𝘀𝗲 𝗰𝗮𝘀𝗲𝘀:

- When goals and product strategy are clear, this is easier. And analytics need to be in place! This method relies on measurements!

- When they are not clear, it can be difficult to rank your most important factors. Suddenly they are all equally important and you want them all at the same time. It might still work on an encapsulated feature level though.

𝗦𝗸𝗶𝗹𝗹𝘀:

Abstraction and simplification, understanding of priorities, reliable bias filter

𝗣𝗿𝗼𝗰𝗲𝘀𝘀:

Attention: I’m not explaining the article! I’m describing what I did in the past, which sounds like what the article describes but is still different.

- You collect the most important measurable factors of your product. Keep it short!

In my case, I had collected user outcomes that we wanted to achieve with some initiatives. For example, this year we must get more users to sign up/use feature x/subscribe. This would be 3 outcomes.

On a strategic level, it could be business outcomes instead. E.g. new products often focus on growth in the first year: business outcomes could be more customers (market share), more brand awareness (top of mind), customer happiness through customer onboarding, customer happiness through UX.

- Stack rank the factors from most important at the top to least important at the bottom, e.g.

- Signup

- Subscription

- Feature adoption

This is for sure more difficult on a strategic level.

- Define what “significant move” means and check which features in the list will significantly move each factor

- Dismiss features that don’t support the top factor, then those that don’t support the second factor, then third factor, etc.

- Prioritize remaining features
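The filtering steps above can be sketched in a few lines. This is a minimal illustration of my variant, not of the linked article: each feature is listed with the factors it moves significantly, and the list is narrowed factor by factor, starting with the most important one. Features C and D and the stop rule (never dismiss everything) are assumptions added for the example.

```python
def binstack(features: dict[str, set[str]], ranked_factors: list[str]) -> list[str]:
    """Narrow down features factor by factor, most important factor first.

    `features` maps a feature name to the set of factors it moves significantly.
    """
    survivors = list(features)
    for factor in ranked_factors:
        supporting = [name for name in survivors if factor in features[name]]
        if not supporting:
            # Assumption: stop as soon as a factor would dismiss every
            # remaining feature, and keep the current survivors.
            break
        survivors = supporting
    return survivors


# Factors in stack-ranked order, most important first.
ranked_factors = ["signup", "subscription", "feature adoption"]

# Hypothetical assessment of which factors each feature moves significantly.
features = {
    "Feature A": {"signup", "subscription"},
    "Feature B": {"signup", "subscription", "feature adoption"},
    "Feature C": {"subscription", "feature adoption"},
    "Feature D": {"signup"},
}

shortlist = binstack(features, ranked_factors)
print(shortlist)
```

Note that Feature C supports two factors, just like Feature A, but it is dismissed first because it misses the top factor. That is the difference from counting how many factors a feature supports.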

𝗧𝗵𝗲 𝗱𝗶𝗳𝗳𝗶𝗰𝘂𝗹𝘁𝗶𝗲𝘀:

Agreeing on the factors

Agreeing on the ranking of the factors

Agreeing on what significant really means

Agreeing on the contributions to the factors

Having the balls to say “no” and dismiss features entirely

It’s a bit more time consuming and therefore difficult to get people to do it again and again. But once you’ve set up your process and have settled into it, it’s really nice.

I recommend checking against confidence and running experiments to increase your confidence level! I regretted that I didn’t 🙂

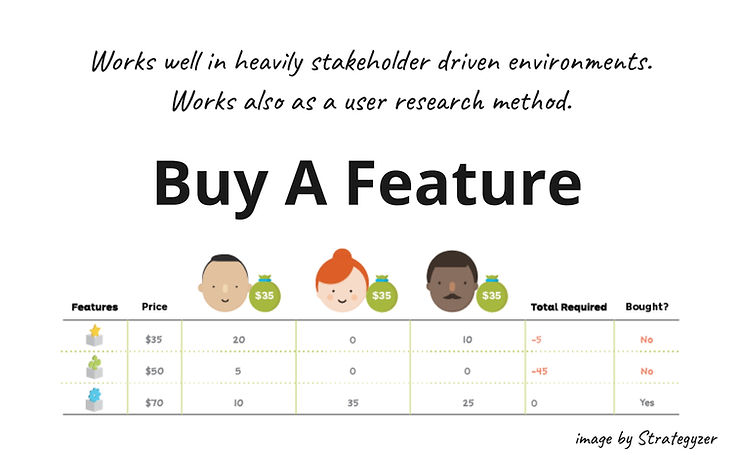

4. Buy A Feature

Which feature would you spend your last penny on?

Buy a Feature is an innovation game. Innovation Games is a book by Luke Hohmann with lots of different collaborative plays that help you make decisions collaboratively. There are plays for making product decisions, others for user research, team or stakeholder alignment, retros, etc., and generally you can apply many of them to different situations. The famous Speed Boat and Prune the Product Tree exercises are also innovation games.

𝗙𝗶𝗿𝘀𝘁 𝘁𝗵𝗶𝗻𝗴𝘀 𝗳𝗶𝗿𝘀𝘁: Buy A Feature originally was created as a form of user research to understand what customers see as priority and shift the attention from internal prioritization. In this context, it’s a great technique.

However, if you want to use it as an internal prioritization technique note that this is NOT a precise prioritization technique either – UNLESS you can moderate it in a way that stakeholders base their arguments on data, insights, facts and can spot assumptions. So, in a heavily stakeholder (SH) driven environment it makes SHs think in a different way (data and insights) and keeps the conversation focused.

Let me first explain how it works, then I’ll tell you better what I mean.

𝗦𝗸𝗶𝗹𝗹𝘀:

Moderation

𝗨𝘀𝗲 𝗖𝗮𝘀𝗲𝘀:

- SH driven environment where agile stands for “efficient delivery of what we want”

- User research. A better form of stack ranking.

Process for internal prioritization:

- Think about how many SHs you want to invite to the prio workshop.

- Make a list of features or improvements you want to be ranked and short explanations.

- Add a price tag to each one, e.g. $5 per person-day, or T-Shirt sizes S=$50, M=$100, L=$150 (or however you want to price it). The price stands for the effort that it takes to build the feature.

- Calculate the sum of all items. Let’s say it’s $1200. Take 1/4 ($300) or 1/3 of that as total budget for the workshop.

- Divide the budget by the number of SHs. Let’s say $300/5 SHs = $60

- The cheapest feature should not be buyable by a single SH. So adjust your prices accordingly.

- In a 1h workshop distribute virtual $60 to the SHs, explain the features and give the instructions to buy the features that they believe the customers want to be built next. Nobody is able to buy a feature by themselves. They need to collaborate to buy features, and for this they need to argue with insights and data to make their points why the customers would want this.

This way you get a sorted list of features that are desired to be built.
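The budget arithmetic is easy to get wrong in a spreadsheet, so here is a small sketch of it. The feature names are placeholders; the prices follow the T-shirt sizes from the example above and add up to $1200. The check at the end flags the case where a single SH could buy the cheapest item alone.

```python
def workshop_budget(prices: dict[str, int], stakeholders: int,
                    fraction: float = 0.25) -> dict:
    """Derive the workshop budget from the feature price tags."""
    total = sum(prices.values())
    budget = total * fraction           # 1/4 (or 1/3) of the total price
    per_person = budget / stakeholders  # virtual money handed to each SH
    cheapest = min(prices.values())
    return {
        "total": total,
        "budget": budget,
        "per_person": per_person,
        # True means one SH could buy the cheapest feature alone,
        # so the prices (or the fraction) need adjusting.
        "solo_purchase_possible": per_person >= cheapest,
    }


# Placeholder features priced by T-shirt size: S=$50, M=$100, L=$150.
prices = {
    "S1": 50, "S2": 50, "S3": 50, "S4": 50,
    "M1": 100, "M2": 100, "M3": 100, "M4": 100,
    "L1": 150, "L2": 150, "L3": 150, "L4": 150,
}

result = workshop_budget(prices, stakeholders=5)
print(result)
```

With these numbers each SH gets $60, which is enough to buy a $50 item alone. That is exactly the situation the “adjust your prices accordingly” step is there to catch, for example by raising the S price or inviting more SHs.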

For external prioritization (User Research):

Play the same game as above with a number of people who match your target group and let them collaboratively stack rank the resulting list.

Twist: Give them compensation money upfront ($20 gift card or cash or whatever) and tell them to use this to buy features.

Twist of the twist for either case: Instead of buying, you can ask them to invest into the feature that they believe would multiply their money.

The conversation now moves from silos & egos more towards thinking in impacts from the customer’s point of view.

Regarding internal prioritization: It’s still subjective and biased, everyone argues from their perspective and maybe without data. But when you can make them focus on customer impact, this method can help you to get beyond “I want”. Even better when you can make them talk business and customer impact but in these environments business impact is typically already forefront.

Regarding external prioritization: Make the users explain their investment. Understanding the Why behind their purchase decisions is way more important than the purchase decision alone without any context.

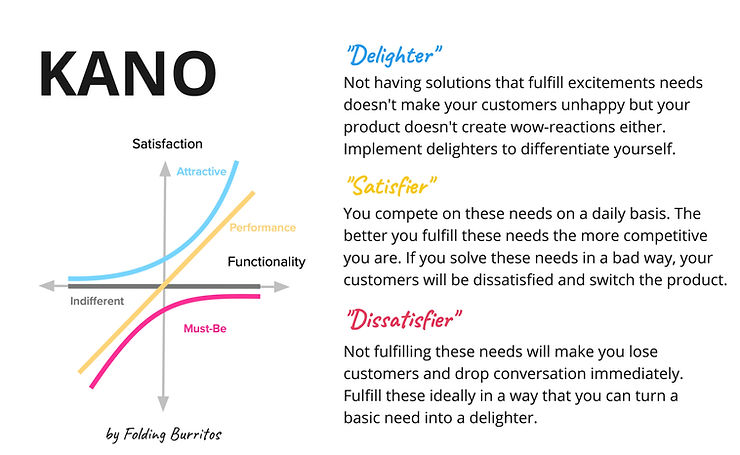

5. KANO model

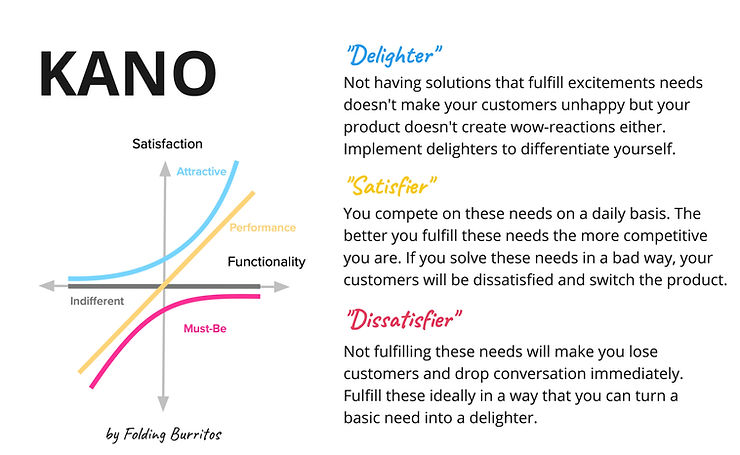

KANO model is NOT a prioritization method!

I learned about the KANO model 20 years ago in Customer Behavior and Six Sigma lectures at university. It’s originally a qualitative (!) research model that helps classify customer preferences for a product or service for unique positioning in the market. It helps you to eliminate waste and focus on what’s necessary, but it’s not a prioritization model.

𝗛𝗶𝘀𝘁𝗼𝗿𝘆:

The model was created in the 1980s by Noriaki Kano, a professor of quality management at the Tokyo University of Science. He was researching which factors of a product satisfy customers and make them loyal. He made Toyota employees “leave the building” and observe customers in their daily environments and while using the product.

𝗘𝘅𝗰𝘂𝗿𝘀𝗶𝗼𝗻:

Sounds familiar? Yes, Jobs to be Done 😉

To think in JTBD & KANO, one example is iPhone’s power button. It doesn’t exist. Why? Because “having a power button” is not a basic need. “Turning the phone on and off” is a basic need that can be solved in a different way so that the design became mind-blowing.

𝗕𝗮𝗰𝗸 𝘁𝗼 𝗞𝗔𝗡𝗢:

Then they were supposed to exchange their observations, eliminate what’s not needed and find those factors that are really important. In order to do this, they categorized their findings into 5 categories: attractive, performance, indifferent, must-be and undesired.

👉 Nowadays we tend to look at only 3 categories:

- Attractive, Excitement needs, or “Delighters”:

These are things that people don’t know they want until they get them. They don’t expect these features and are positively surprised. These have the potential to create hype around your product.

- Performance needs, or “Satisfiers”:

The better this feature works for your customers, the more satisfied they are. But the worse it works, the more dissatisfied they are, and they will switch to another product. You want to get these right because in day-to-day usage you compete on these features!

- Basic needs, or “Dissatisfiers”:

If you don’t satisfy these needs, your customers will be pissed but they won’t be happier the better you solve it for them. Again, turning a phone on and off is a basic need but does it make me happier that I don’t have an extra button for that? No. I don’t care. But it creates a cleaner design in return, which delights or satisfies me.

Over time, a functionality moves from delighter to satisfier to dissatisfier, which is a natural evolution. For example, airbags in cars were once a big thing. Then all car manufacturers added airbags to their cars. They competed on airbags, building them all around the car, smaller, bigger, less damaging in case of an accident, etc. But now they are a standard feature, and you don’t prefer one car over another because it has airbags. You expect it to have airbags.

You can also create a survey to classify your existing features but it’s difficult to get reliable answers for your delighters. Because delighters are difficult to point at. If they’re able to point them out they might have already turned into satisfiers.

To get started, here’s a full guide on KANO model by Folding Burritos. It’s very comprehensive and also includes how to run the survey and analyse the results.

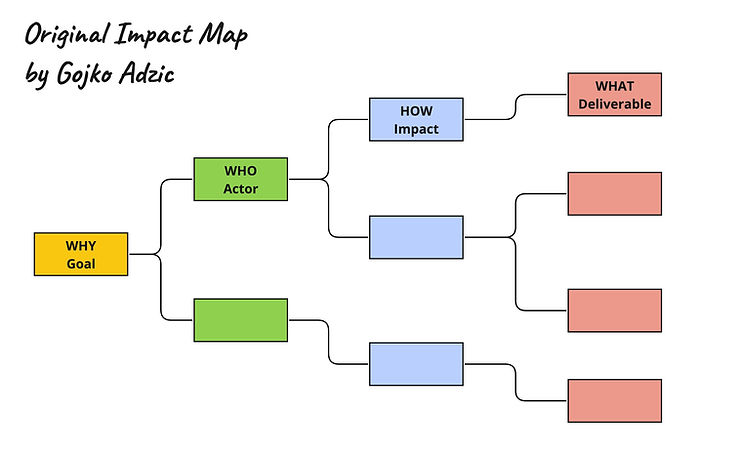

6. Impact Mapping

I love Impact Mapping because it’s helpful in so many ways – even with prioritization!

Like the KANO model, Impact Mapping is not a prioritization method in itself but it can help you to determine relevant features in the first place which you can further evaluate for prioritization.

What does “relevant” mean in this context?

As product people we define products that create both user value (desirable) and business value (viable). We get flooded with requests or ideas that look random. How can we pick the right ones to further evaluate, so that we have high confidence right from the beginning that our ideas are aligned with business goals and product strategy?

✨✨✨ Enter the magic world of Impact Mapping ✨✨✨

Impact Mapping, invented by Gojko Adzic, “is a lightweight, collaborative planning technique for teams that want to make a big impact with software products. […] Impact maps help delivery teams and stakeholders visualise roadmaps, explain how deliverables connect to user needs, and communicate how user outcomes relate to higher level organisational goals.” – impactmapping.org

It has been widely adopted in software product and project management. Nowadays, Impact Maps guide discovery, OKRs, Opportunity Solution Trees, and more (see image). They help eliminate non-aligned elements and focus on relevant features, tasks, ideas, and conversations that contribute to business goals and user outcomes.

𝗨𝘀𝗲 𝗰𝗮𝘀𝗲𝘀:

There are SO MANY cases you can use an Impact Map for that I can’t list them all. In prioritization, some cases are:

- check your roadmap / backlog / idea bank against business goals every now and then

- ideate/select solutions (initiatives or features) for relevant Outcomes that you describe in OKRs

- find outcomes that pay into business goals in the first place

- focus only on outcomes and solutions that serve a specific persona’s needs (the Apple way)

- …

𝗦𝗸𝗶𝗹𝗹𝘀

Facilitation, moderation, ideation, strategic thinking, discovery/analytical thinking

𝗣𝗿𝗼𝗰𝗲𝘀𝘀

- Goal: Start from a business or product goal you want to achieve

- Actors: Identify actors that can have a positive or negative impact on achieving our goal. Pick the most impactful actors.

- Impact: Identify behaviors that we want the actors to show. Write them as outcomes. Use adjectives and superlatives to articulate the improvement. Pick outcomes that potentially have the highest business & user value.

- Deliverables: Ideate solutions that will help you reach these outcomes. Identify the ones that potentially have high user impact. Identify which ones you want to test, consider planning in, or reject.

👆This is simple but not easy. Group mapping requires strong moderation skills to avoid getting lost in discussions. Writing effective outcomes can be challenging, but there are helpful tricks available.

Note: There are lots of nuances to an Impact Map that I haven’t mentioned in this post. But I keep writing about Impact Mapping.

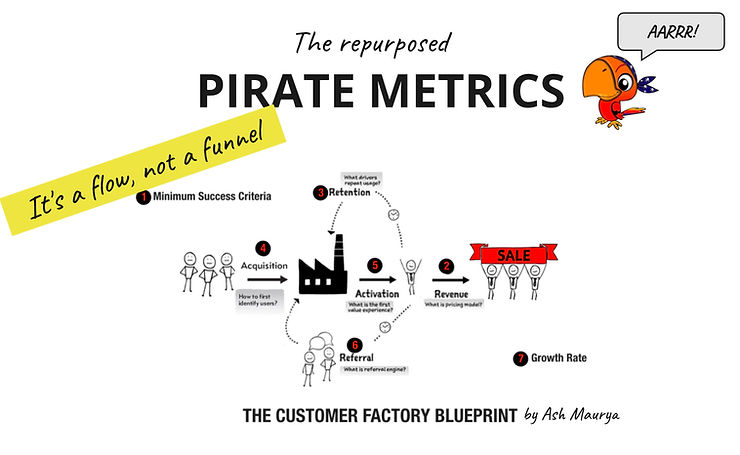

7. Pirate Metrics

In the bucket of pre-prioritization (like KANO and Impact Mapping), there is a hidden champion. Everybody knows this framework but most people underestimate its versatility and power.

Repurposed Pirate Metrics!

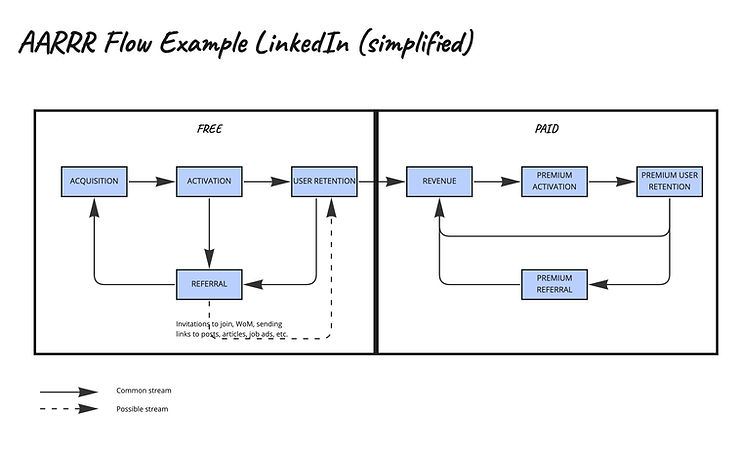

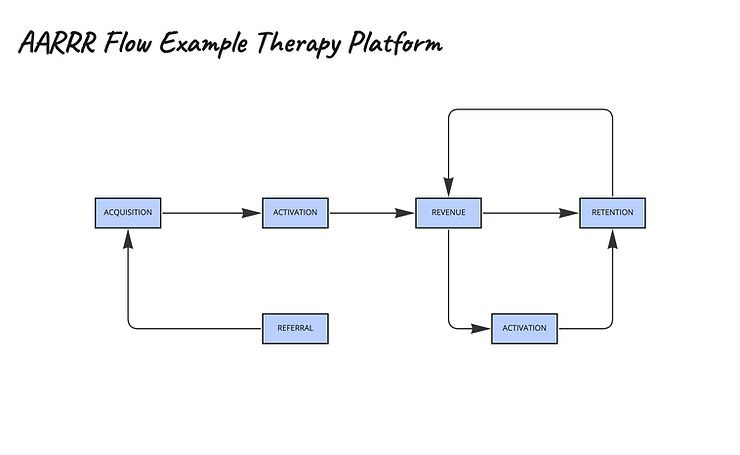

AARRR as a flow, not a funnel!

Like most of us, I was introduced to Dave McClure’s AARRR as a funnel (watch this video or see his slides). In an exercise at Doodle we mapped our product to AARRR and built a flow. We spotted what our product was missing and defined success metrics per stage. Based on our findings and the strategic direction, we decided which step to improve and focus on in the next quarter. It’s a very easy way to connect product strategy with tactical improvements, prioritize your next actions, and identify only those ideas that contribute to the selected focus.

Then I went to a meetup where I saw Ash Maurya’s Customer Factory Blueprint for the first time and thought “hey, this is a thing”. Then I read his article about it. And here is more about the systems side of the customer factory.

Ever since, this is where I start when a product team or person comes with a challenge like

- I need to screen our ideas and pick the ones that improve our product but I don’t know where to start

- Our product strategy isn’t clear, how can I decide what to build next?

- How can I find out which metrics to focus on and improve, and how do I find relevant metrics at all?

Not to say that the traction model he built based on the customer factory is what I check my own ideas against (because behind the scenes I’m working on a couple of ideas but don’t tell anyone 🤫)

𝗨𝘀𝗲 𝗖𝗮𝘀𝗲𝘀 in the context of prioritization 👆

𝗦𝗸𝗶𝗹𝗹𝘀

Collaboration, analytical thinking, customer journey analysis, a bit of business model knowledge

𝗣𝗿𝗼𝗰𝗲𝘀𝘀 for prioritization

Attention: Though I’m a huge fan of the Customer Factory Blueprint and its general wisdom on the business model and traction calculation side, I personally believe that every product’s AARRR flow looks different.

- Discuss your customer’s journey in your team and define what your product’s AARRR flow looks like

- Map your product’s pages or functionality to your AARRR flow

- Identify relevant metrics per stage, analyse product performance according to the metrics and spot where the biggest lever for improvement is

OR (if analytics isn’t set up properly)

Based on other input and team conversation, create a common understanding of which stage holds the biggest lever for improvement.

- Identify solutions that help improve the stage that you have agreed to focus on

You can do a lot more, e.g. spotting what’s missing to close the loop like I describe at the top. I’m just showing one use case here.

ALSO, you can extend the AARRR flow with additional stages and focus points like Engagement, Habit Moments, etc. to make the metrics and conversations about them explicit.

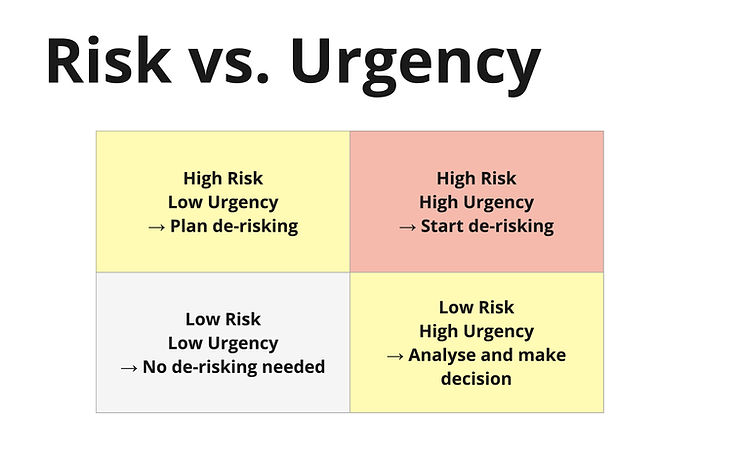

8. Risk Assessment

Have you ever shipped something without exploring whether it might be harmful? 🙋🏻‍♀️

Ha! We are all guilty!

That’s what all of us have done already at least once in our career. But de-risking is one of the duties that we have as product people. So how do we do that?

𝗖𝗼𝗻𝘁𝗶𝗻𝘂𝗶𝗻𝗴 𝘁𝗵𝗲 𝗽𝗿𝗶𝗼𝗿𝗶𝘁𝗶𝘇𝗮𝘁𝗶𝗼𝗻 𝘀𝗲𝗿𝗶𝗲𝘀 𝘄𝗶𝘁𝗵 𝗿𝗶𝘀𝗸 𝗮𝘀𝘀𝗲𝘀𝘀𝗺𝗲𝗻𝘁.

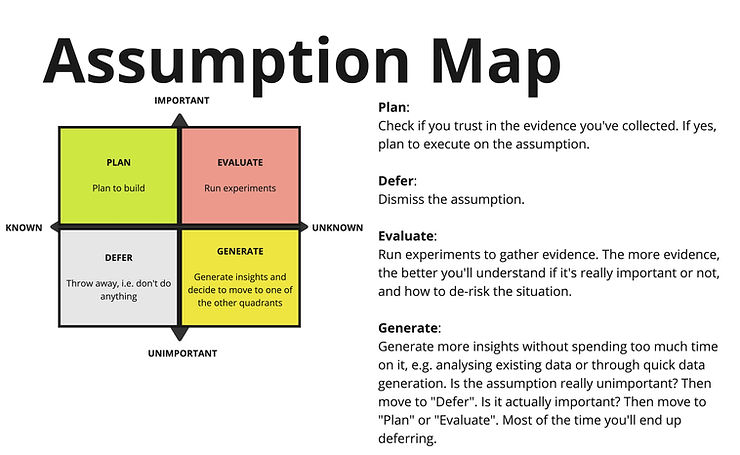

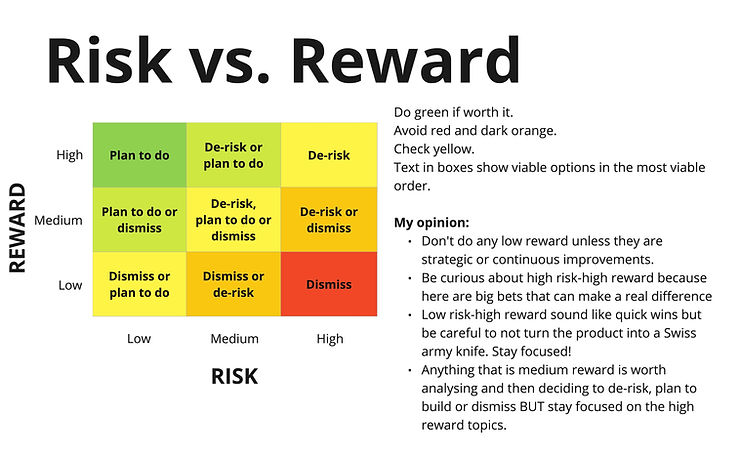

There are several methods that help you to assess risk of an idea. In this post I’m not going to share a process but multiple risk assessment methods in the images.

One way to bring risk into prioritization is to use these risk assessment techniques and start experimenting with the riskiest items to de-risk them. Another way is to add a risk score to whatever scoring you do and weight it high so that the riskiest items always appear at the top of your list.

1. 𝗔𝘀𝘀𝘂𝗺𝗽𝘁𝗶𝗼𝗻 𝗠𝗮𝗽:

- Identify your assumptions about the idea

- Map assumptions against Importance & Unknown.

- Importance: How important is it for the business case that the assumption turns out to be true.

👉 Ask: What will happen if the assumption turns out to be false?

Nothing → Low importance

We’d need to adjust the concept but the general direction is right → Medium importance

It will break the whole concept → High importance.

- Unknown: How much evidence do we have that the assumption is right?

Little → high unknown

A lot → low unknown
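If you keep your assumptions in a list rather than on a whiteboard, the mapping can be sketched like this. The numeric values for low/medium/high, the multiplication, and the example assumptions are all choices made for this illustration; the map itself only asks you to place each assumption on the two axes.

```python
# Assumed numeric values for the levels; adjust to your own scale.
LEVELS = {"low": 1, "medium": 2, "high": 3}


def riskiest_first(assumptions: list[dict]) -> list[dict]:
    """Sort so that important assumptions with little evidence come first."""
    return sorted(
        assumptions,
        key=lambda a: LEVELS[a["importance"]] * LEVELS[a["unknown"]],
        reverse=True,
    )


# Hypothetical assumptions about an idea.
assumptions = [
    {"text": "Teams will pay per seat", "importance": "high", "unknown": "low"},
    {"text": "Users want to invite guests by link", "importance": "high", "unknown": "high"},
    {"text": "Admins read the weekly summary", "importance": "low", "unknown": "low"},
    {"text": "Guests accept invites on mobile", "importance": "medium", "unknown": "high"},
]

for a in riskiest_first(assumptions):
    print(f'{a["importance"]:>6} importance / {a["unknown"]:>6} unknown: {a["text"]}')
```

The assumptions at the top of the output are the candidates for your first experiments.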

2. 𝗥𝗶𝘀𝗸 𝘃𝘀. 𝗥𝗲𝘄𝗮𝗿𝗱

- Map the idea against “How risky is the idea” (e.g. highly complex, a lot of unknowns, new territory, etc.) vs.

- “How big is the reward if we get this right”? (e.g. winning a new market vs. gaining only little more market share in existing market).

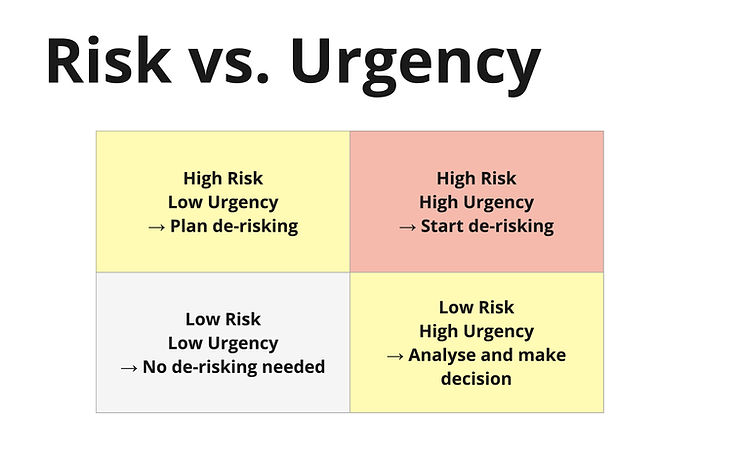

3. 𝗥𝗶𝘀𝗸 𝘃𝘀. 𝗨𝗿𝗴𝗲𝗻𝗰𝘆

- Risk: “How risky is the idea” (e.g. highly complex, a lot of unknowns, new territory, etc.) vs.

- Urgency: “How urgently do we need results of experiments to move on with our idea?” In other words, can this idea wait a little longer because it’s not urgent in the overall strategic context at the moment?

- Something that is low risk and high urgency should be checked again, because it’s odd for something urgent not to be risky. Why would we urgently need an answer for that? Either a short analysis is enough to confirm that it’s really low risk and therefore doesn’t need to be tested, or you will notice that it’s actually high risk, and then you decide again how urgently you need an answer and whether you want to run an experiment.

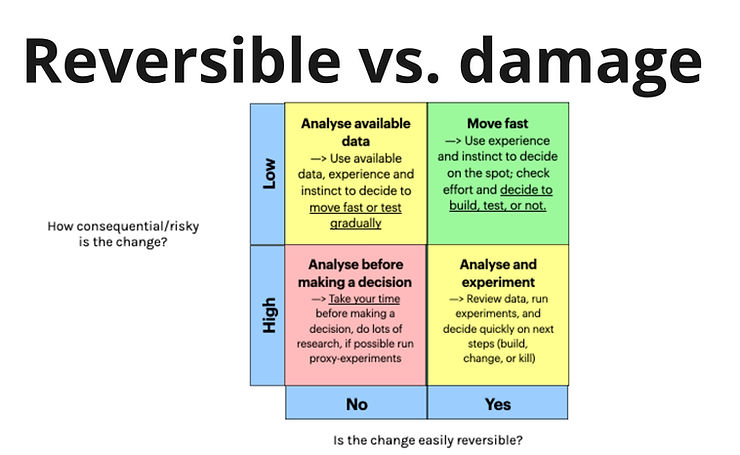

4. 𝗥𝗲𝘃𝗲𝗿𝘀𝗶𝗯𝗹𝗲 𝗼𝗿 𝗻𝗼𝘁

- This has become known as “1-way & 2-way door decision” but it actually has some more nuances

- Ask: Is the change easily reversible y/n? And how consequential/risky is the change?

- 👉 Color changes are easily revertible. Features are maybe not so easy to revert but the risk isn’t that high. Ideally you have feature flags in place, in that case most changes are easily revertible. But changes like big mergers are not easily reversible and are extremely risky. You want to take your time to de-risk and analyse as much as possible before making a decision.

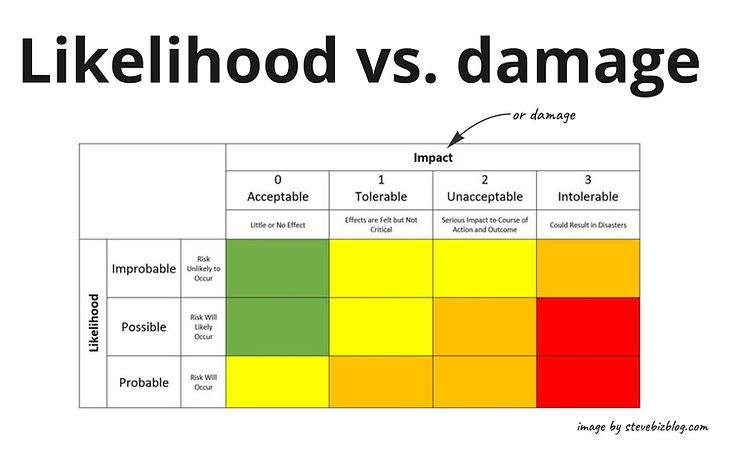

5. 𝗟𝗶𝗸𝗲𝗹𝗶𝗵𝗼𝗼𝗱 𝘃𝘀. 𝗱𝗮𝗺𝗮𝗴𝗲 𝗽𝗲𝗿 𝗿𝗶𝘀𝗸

- How likely is it that we will face this risk?

- When this risk happens, how much damage will it cause us?

- You can also prioritize bugs with this matrix.

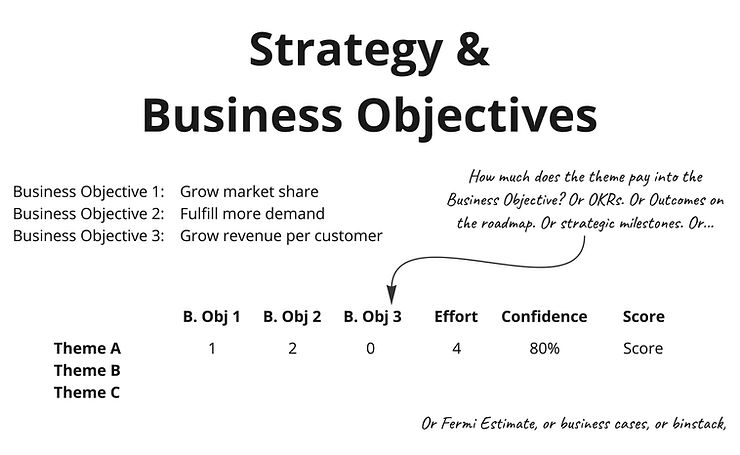
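For a list of risks or bugs, the matrix can be turned into a rough ordering. The sketch below assumes 1 to 5 scales for both axes and multiplies them, which is a common convention for risk matrices but not the only option. The example risks are made up.

```python
def risk_exposure(likelihood: int, damage: int) -> int:
    """Likelihood times damage, both on an assumed 1 (low) to 5 (high) scale."""
    return likelihood * damage


# Hypothetical risks: name -> (likelihood, damage).
risks = {
    "Typo on pricing page": (4, 1),
    "Double charge at checkout": (2, 5),
    "Data loss during sync": (3, 5),
}

ranked = sorted(risks, key=lambda name: risk_exposure(*risks[name]), reverse=True)

for name in ranked:
    likelihood, damage = risks[name]
    print(f"{name}: {risk_exposure(likelihood, damage)}")
```

A single number hides the difference between “likely but harmless” and “rare but catastrophic”, so keep the two inputs visible when you discuss the list.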

9. Strategy & Business Objectives

“Stop telling me I should prioritize based on strategy! Tell me how to do it instead!”

Your wish is my command.

Here is 𝗢𝗡𝗘 way how you can prioritize initiatives, themes, features (whatever level you work on) against strategic objectives.

𝗦𝗸𝗶𝗹𝗹𝘀

Analytics, communication, negotiation, influence, strategic thinking, sense-making

𝗨𝘀𝗲 𝗖𝗮𝘀𝗲𝘀

Especially for strategic roadmapping, but in general this is useful on any level

𝗣𝗿𝗼𝗰𝗲𝘀𝘀

- Get clarity on objectives for your product! These might be the time horizon’s business objectives, OKRs, outcomes or milestones on the roadmap, stepping stones to achieve, etc. Or from the different jobs to be done, pick one to focus on.

You need an objective or JTBD to work towards!

- Map the topics (ideally themes) against their impact on the strategic objective or JTBD. If you want to score it, then score it, but make it an informed score. Meaning: Write a business case based on experiment results, make a Fermi estimation, get numbers from your analytics, input from your qualitative research, etc.

OR you can use binstacking to qualify only those themes that pay into all objectives, if that’s what you want and need in that case (needs clarification from your manager).

- Pick the most promising themes.

𝗧𝗵𝗮𝘁’𝘀 𝗶𝘁.

You see, prioritization against strategic objectives is NOT complex.

It’s way more straightforward than any other prioritization method.

The only reasons why it feels difficult are

a) because it has the word “strategy” in there, and

b) strategy is maybe not clear in your company or

c) you have to create a strategy but you are in the very beginning of the product lifecycle and don’t have enough input to set one.

In case of c) I recommend using milestones instead, for example something like “in 3 months from now we have found a problem worth solving for our target group”.

10. Further readings

My two go-to guides on prioritization methods are these:

- Folding Burritos: https://foldingburritos.com/blog/product-prioritization-techniques/

- Productboard: https://www.productboard.com/glossary/product-prioritization-frameworks/

✨✨✨✨✨✨✨✨✨✨✨

I hope this guide gives you a different perspective from other guides, where these methods are described in a theoretical way. I also hope that you’ve discovered some more techniques beyond the ones you already knew. There are a lot more of course: any 2×2 matrix (Impact/Effort, Importance/Urgency, Business Value/Customer Value, etc.), Prune the Product Tree, Weighted Scoring, Business Cases, PIE (Potential, Importance, Ease), etc.

But I would write infinitely if I wanted to explain them all 🙂

Cheers!